Most companies have already embraced Big Data. They have equipped themselves with the technologies needed to collect massive streams of data in real time. However, that is no longer where the competition lies. The real game now is a company’s ability to extract value from the data it collects. Data quality has become a central and obvious concern. Yet in practice, it is still handled far too haphazardly, and the results are often unsatisfactory...

Just because it’s possible doesn’t mean it should be done!

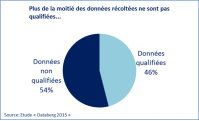

Big Data enables the collection of all kinds of data. But is all of it truly useful? No!

Before collecting data left and right, it's essential to define what is actually needed.

If I go to the supermarket without a shopping list, I might be tempted by all kinds of products. When I get home, even if my cupboards are full, I still may not have what I need. A company equipped with Big Data technology is like someone shopping without a list. Before diving into the Big Data world, it’s crucial to determine a strategy and clearly identify the need.

That way, we can both qualify the data and avoid the pitfall of indiscriminate data collection, which only clutters up our precious storage space.

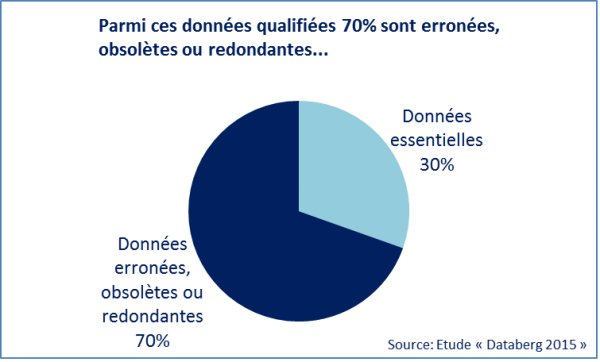

Quality over quantity

Once the need is identified, it’s important to implement tools and methods to collect quality data. Did you know that a quarter of the data collected by companies is inaccurate[1]? Take, for example, a company trying to measure its popularity on social media. If I’m the company Mars (famous for its chocolate bars), when I analyze posts referring to my brand, I need to be careful not to include the many posts about Bruno Mars, the American singer. Let’s not forget that data analysis is increasingly the foundation for operational and strategic decision-making. Decision-makers must be able to trust the information. It’s therefore better to prioritize data quality over quantity.
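The Mars example can be sketched in a few lines of Python. This is only a minimal illustration, assuming posts arrive as plain strings; the `EXCLUDE_TERMS` list, the `is_brand_mention` helper, and the sample posts are hypothetical. A real pipeline would need far more context to separate the brand from the singer (or the planet).

```python
import re

# Hypothetical phrases that signal a post is about something other than the brand.
EXCLUDE_TERMS = ("bruno mars",)

# Match "mars" as a whole word, ignoring case.
BRAND_PATTERN = re.compile(r"\bmars\b", re.IGNORECASE)


def is_brand_mention(post: str) -> bool:
    """Return True if "Mars" still appears once excluded phrases are removed."""
    text = post.lower()
    for term in EXCLUDE_TERMS:
        text = text.replace(term, " ")
    return BRAND_PATTERN.search(text) is not None


posts = [
    "Just had a Mars bar, still the best snack",
    "Bruno Mars was amazing live last night",
    "Listening to Bruno Mars while eating a Mars bar",
]

# Keep only the posts that plausibly refer to the brand.
brand_posts = [p for p in posts if is_brand_mention(p)]
```

Here the second post is dropped, while the third is kept because it still mentions the brand once the singer’s name is set aside.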

Data quality also depends on how it’s processed. Standardization is key to avoiding duplicates: products, services, or locations can go by multiple names. For example, the city of Paris has many nicknames, such as “City of Light” or “Paname.” Treated as separate values, these could skew analysis results.
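As a rough sketch of that kind of standardization, the Python snippet below maps each known variant to a single canonical name before counting. The `CANONICAL_NAMES` table and the `standardize` helper are hypothetical; in practice the alias table would come from a maintained reference dataset.

```python
from collections import Counter

# Hypothetical alias table: every known variant maps to one canonical name.
CANONICAL_NAMES = {
    "paris": "Paris",
    "city of light": "Paris",
    "paname": "Paris",
}


def standardize(name: str) -> str:
    """Return the canonical name for a known alias, else the trimmed input."""
    key = " ".join(name.lower().split())  # lowercase and collapse whitespace
    return CANONICAL_NAMES.get(key, name.strip())


raw_locations = ["Paris", "Paname", "City of Light", " paris ", "Lyon"]

# Without standardization these would be counted as five distinct values.
counts = Counter(standardize(n) for n in raw_locations)
```

After standardization, the four Paris variants are counted together as a single city instead of four.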

[1] The State of Data Quality, Experian Information Solutions, Inc.

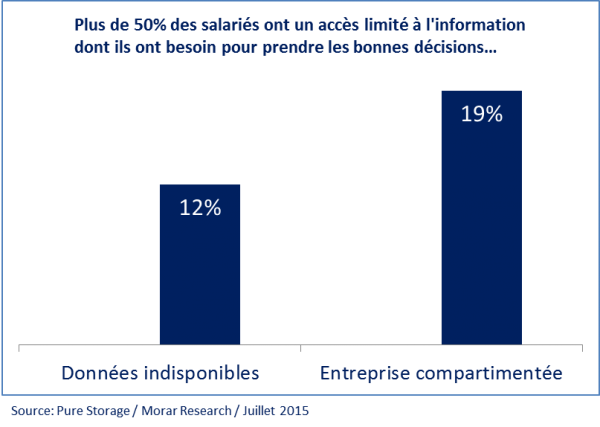

Centralize and share data!

More than 50% of employees report having limited access to the information they need to make sound decisions. It’s not just about collecting the right data; it also needs to be shared across the organization. Internally, it’s important to not only gather information but to do so in a uniform and automated way to ensure accuracy. Data must be centralized and consistent to avoid contradictory, outdated, or duplicate information.

A constantly updated, shared internal data inventory provides that assurance. It becomes a trusted reference that limits misinterpretation.
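To make the idea concrete, here is a minimal Python sketch of consolidating records held by two departments into one reference. Everything in it is an assumption made for illustration: the `sales` and `support` lists, the `customer_id` key, and the rule that the most recently updated record wins.

```python
from datetime import date

# Hypothetical customer records held separately by two departments.
sales = [
    {"customer_id": 1, "email": "ana@example.com", "updated": date(2023, 1, 10)},
    {"customer_id": 2, "email": "ben@example.com", "updated": date(2023, 3, 5)},
]
support = [
    {"customer_id": 1, "email": "ana.new@example.com", "updated": date(2023, 6, 1)},
]


def consolidate(*sources):
    """Keep one record per customer_id: the most recently updated one."""
    reference = {}
    for source in sources:
        for record in source:
            current = reference.get(record["customer_id"])
            if current is None or record["updated"] > current["updated"]:
                reference[record["customer_id"]] = record
    return reference


# One shared view instead of two contradictory ones.
reference = consolidate(sales, support)
```

The outdated email held by sales is replaced by the newer one from support, so both teams read the same value.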

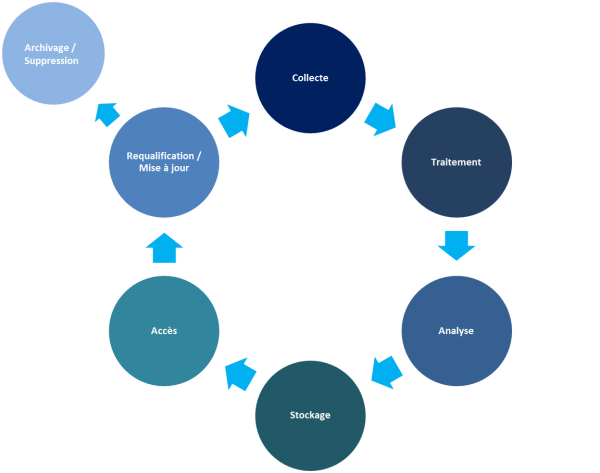

Manage the data lifecycle

Finally, data is not permanent: what’s relevant today may not be relevant tomorrow.

This is why managing the data lifecycle is essential. Few companies adopt a strategy to review, archive, and delete their data. Yet this is crucial for maintaining a healthy and sustainable database. Regular reviews confirm that data is still relevant, and otherwise lead to it being requalified or updated. This prevents the storage of outdated or incorrect information. The choice between archiving and deleting is up to the company. Deletion must be weighed carefully so that the company does not lose sensitive data it still has to keep, whether to meet regulatory requirements or to ensure data security.
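A review policy of this kind can be sketched as a simple rule in Python. The thresholds, the `Record` fields, and the `must_retain` flag below are hypothetical; real values depend on each company’s policy and legal obligations, and the final decision to delete remains a human one.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical thresholds, chosen only for illustration.
REVIEW_AFTER = timedelta(days=365)
ARCHIVE_AFTER = timedelta(days=3 * 365)


@dataclass
class Record:
    record_id: str
    last_updated: date
    must_retain: bool = False  # e.g. a regulatory retention requirement


def lifecycle_action(record: Record, today: date) -> str:
    """Suggest an action based on how long ago the record was last updated."""
    age = today - record.last_updated
    if age < REVIEW_AFTER:
        return "keep"
    if age < ARCHIVE_AFTER:
        return "review"
    # Old records that must be retained are archived, never proposed for deletion.
    return "archive" if record.must_retain else "delete_candidate"


today = date(2024, 1, 1)
records = [
    Record("recent", date(2023, 6, 1)),
    Record("stale", date(2022, 6, 1)),
    Record("old", date(2019, 1, 1)),
    Record("old_regulated", date(2019, 1, 1), must_retain=True),
]
actions = {r.record_id: lifecycle_action(r, today) for r in records}
```

The rule only flags deletion candidates; it does not delete anything, which leaves room for the careful consideration described above.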

These basic principles allow companies to regain control over their information. A strong data management strategy increases visibility, helps protect and leverage data, and supports decision-making. Mastering data is a prerequisite for Big Data to live up to its promises: delivering the insights needed to understand and anticipate customers’ needs and to improve the customer experience.